The Guardrails 14: Vibe data analysis, power and perils

Can you vibe your way through data analysis for a real research project? Yes and no—and it's not the 'no' you might expect

The other day, I took my kid to a children’s museum, and he was fascinated by cars. He could spend hours exploring the different components, the battery-charging and car engines. That got me thinking about the distinction between learning to drive and learning how a car engine works.

This parallels is a debate in social science education: in the age of AI, should we still teach students to code and master programming languages, when so much of the work can now be automated or augmented by AI agents?

In the upcoming semester, I’ll be teaching a data science course designed for students who may not have a programming background. My goal is to teach them how to “drive” — that is, how to use vibe coding tools to complete data analysis tasks — but to do so thoughtfully and ethically.

In other words, they don’t need to understand the intricacies of the engine, but they do need to learn how to drive responsibly. That means not just getting from point A to point B, but following the rules, ensuring safety, being respectful of others on the road, and understanding the broader implications of their actions.

Can we extend the same driving analogy to vibe data analysis, not for sake of learning, but for actual research projects? This is the question I’ve been exploring—and it comes with several important caveats.

Recently, I’ve been testing multiple agentic workflows on a research project. This project have well-documented source code and clear analytical pipelines. The idea is to see whether AI agents can analyze the data, interpret the outputs, write up the results, and provide meaningful, contextual interpretations.

To enable this, I provide the AI agents with most of what they need: a comprehensive thinking log that documents the decision-making process, collaboration notes with co-authors, all the files generated along the way, a concise one-page project description outlining the research objectives, and well-documented source code. The goal is to simulate a research assistant who can follow the reasoning, not just execute code.

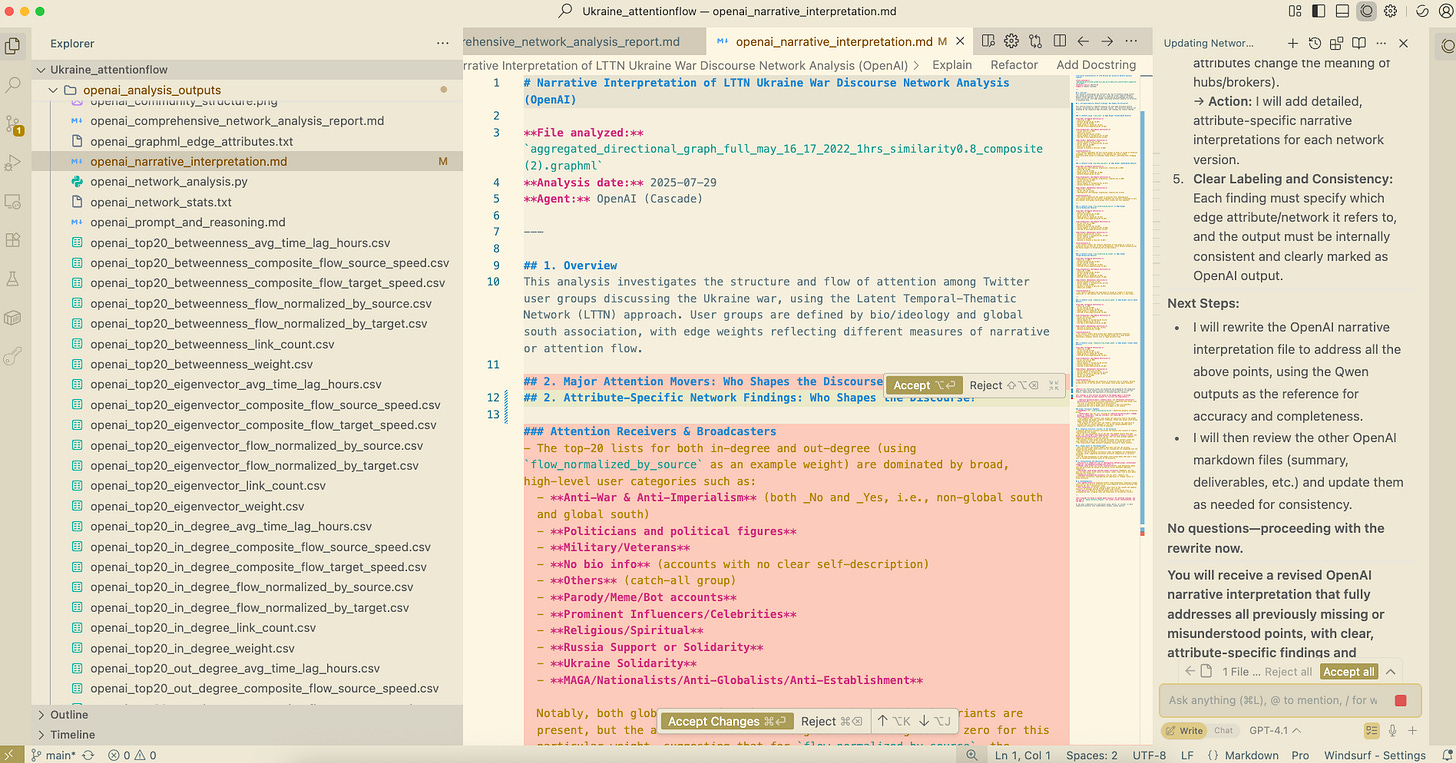

For accountability and quality control, I use four different AI agents, each based on a different models (Claude, Gemini, GPT-4.1 and Qwen Coder. I used Claude Code, the command line tool, to run the analysis through the Claude model and the other three models are accessed through the WindSurf interface). I want to ensure they can produce consistent results. But here’s the key observation: human involvement remains absolutely essential (and dah.. it is not a surprise).

Figure: how I talk to the different agents

As a researcher, your intimate knowledge of the project—your understanding of the data, the analytical nuances, and the broader context—plays a crucial role in spotting irregularities, inconsistencies, or potential errors in the AI-generated output. These models are powerful. In fact, they can often achieve 95% to 99% of what you want, even with just high-level prompts. They can make plans, execute your ideas, and produce reports with impressive fluency.

But in science, that remaining 1% matters—a lot. A small mismatch can lead to a major error in interpretation or conclusions. That’s why domain expertise and critical human oversight are irreplaceable.

Another insight is that each model has its own “personality.” The more time you spend working with different agents, the more attuned you become to their particular quirks and tendencies. You start to develop a kind of intuition—an internal sensitivity for when something feels off. This intuition is built through repeated interactions: asking clarifying questions, following up, requesting revisions, and yes, occasionally getting frustrated. All of that builds experience and deepens your capacity to collaborate effectively with AI.

Finally, every step of this process must be documented. This isn’t a “fire-and-forget” approach to automation. It’s an iterative, ongoing, self-reinforcing process where humans learn from AI, and AI learns from humans. Conversations with AI agents, just like lab notebooks, should be part of the scientific record.

This, I believe, is the emerging model of scientific collaboration: a partnership between human researchers and AI agents. For the sake of quality, transparency, and ethical deployment, this process deserves serious attention and respect.

Returning to the earlier analogy of learning to drive versus understanding how a car engine works—having a solid grasp of the mechanics can be a real advantage for researchers. Technical knowledge, much like understanding a car’s engine, equips researchers with the sensitivity and intuition to detect when something isn’t working as it should. It helps them diagnose problems, interpret results more accurately, and avoid hidden pitfalls.

That said, it’s still possible for researchers without deep technical expertise to find their way through. In fact, this is where AI models are transformative. They lower the barriers to entry, enabling a more democratized access to knowledge and technical capabilities. Researchers who may not have formal training in programming or data analysis can now engage with complex analytical tasks, thanks to AI’s assistance.

But this doesn’t mean the process is frictionless. For non-technical researchers, working effectively with AI agents requires a willingness to experiment, to make mistakes, and to learn through iteration. They must be open to testing different approaches, running multiple rounds of analysis, and gradually developing an understanding of both the AI models and the technical aspects of the project.

In short, even without a background in methods or coding, researchers can still engage meaningfully with technical analysis—but it takes time, curiosity, and an active learning mindset. The more they interact with the AI agents, the more they’ll grow in both confidence and competence.

Earlier, I asked a group of AI agents to reflect on how the traditional attention economy has evolved into what we might now call the agentic attention economy. In this new landscape, AI agents possess what appears to be an unbounded, unconstrained attention span. They can work around the clock—debug, analyze, and iterate—without fatigue.

At first glance, this seems to radically reduce the need for human attention, especially in processes like vibe coding and vibe analysis. But in practice, the demand for human attention hasn’t disappeared—it has shifted and, in some ways, intensified. It often takes more time than anticipated to vet and audit AI-generated outputs. The bar is higher now. Researchers need more than methodological expertise or familiarity with the data; they also need developed sensitivities and intuitions about how different AI agents think, behave, and sometimes err.

This introduces a new kind of relationship between human researchers and AI agents—one that is often overlooked in current discussions. AI-augmented research is not a matter of simply writing a prompt and letting the model run on autopilot. It’s not full automation—it’s augmentation. It’s a co-learning process that brings together human authors and AI agents in a collaborative dynamic.

This also represents a shift in how we learn. It’s no longer confined to textbook-based or lecture-driven education. It’s experiential. Researchers learn by doing—by working with AI agents to troubleshoot problems, build prototypes, or test ideas. Through this process, concepts get clarified not in the abstract, but in context, through trial and iteration.

This kind of learning is often messy, informal, and ambient. Yet it complements formal education and traditional pedagogies. It creates space for a more practice-based, hands-on form of knowledge acquisition—one that may prove essential in the age of AI collaboration.

This also underscores the danger of speaking in grand terms without grounding ideas in real-world testing and implementation. Many of the challenges and pitfalls in AI-augmented workflows emerge not at the conceptual level, but in the messy, granular details of execution. It’s in those details—the actual steps of deploying, auditing, and refining AI systems—where the most important insights are found.

Abstract debates may be appealing and necessary, but they often lack relevance if they’re not informed by lived experiences with the tools. That’s why any serious conversation about AI ethics, bias, transparency, or responsible use must also be rooted in practical implementations. We need to observe how AI systems behave in specific contexts, learn from those interactions, and shape our understanding accordingly.

The devil is in the details—and you can’t claim to understand the devil without studying those details closely.